Introduction

Nginx¹ is a web server that serves static files and can also act as a reverse proxy, load balancer, mail proxy, and HTTP cache.

It's written in C², and it's blazing fast with a small memory footprint.

Installation

We will use Ubuntu 20.04 for this tutorial. You can use another Linux distribution, Windows, or macOS, but the installation process will be different.

sudo apt update

sudo apt install nginx

sudo nginx -v

To start, stop, or restart the Nginx service, run the following commands:

sudo systemctl start nginx

sudo systemctl stop nginx

sudo systemctl restart nginx

Checking the status

To check the status of the Nginx service, run the following command:

sudo systemctl status nginx

We should see something like this:

● nginx.service - A high performance web server and a reverse proxy server

Loaded: loaded (/lib/systemd/system/nginx.service; disabled; vendor preset: enabled)

Active: active (running) since Sun 2023-11-05 11:05:40 +03; 26s ago

Docs: man:nginx(8)

Process: 3819323 ExecStartPre=/usr/sbin/nginx -t -q -g daemon on; master_process on; (code=exited, status=0/SUCCESS)

Process: 3819324 ExecStart=/usr/sbin/nginx -g daemon on; master_process on; (code=exited, status=0/SUCCESS)

Main PID: 3819325 (nginx)

Tasks: 13 (limit: 18707)

Memory: 10.3M

CGroup: /system.slice/nginx.service

├─3819325 nginx: master process /usr/sbin/nginx -g daemon on; master_process on;

├─3819326 nginx: worker process

├─3819327 nginx: worker process

├─3819328 nginx: worker process

├─3819329 nginx: worker process

├─3819330 nginx: worker process

├─3819331 nginx: worker process

├─3819332 nginx: worker process

├─3819333 nginx: worker process

├─3819334 nginx: worker process

├─3819335 nginx: worker process

├─3819336 nginx: worker process

└─3819337 nginx: worker process

Nov 05 11:05:40 blackbird systemd[1]: Starting A high performance web server and a reverse proxy server...

Nov 05 11:05:40 blackbird systemd[1]: Started A high performance web server and a reverse proxy server.

We can glean some useful information from this output:

- The service is active and running

- There is one master process, and there are 12 worker processes

- The memory footprint is 10.3 MB

We will come back to the memory footprint and worker processes later.

As a Reverse Proxy

The main configuration file is located at /etc/nginx/nginx.conf.

We can add our own configuration files to /etc/nginx/conf.d/. On Ubuntu, the default nginx.conf already includes every *.conf file in that directory inside its http block, so no extra include line is needed.

Let's create a new file called myapp.conf:

sudo vim /etc/nginx/conf.d/myapp.conf

And add the following configuration:

server {
    listen 80;
    server_name myapp.com;

    location / {
        proxy_pass http://localhost:3000;
    }
}

Explanation

- server is a context
- listen is a directive, and 80 is the port number
- server_name is a directive, and myapp.com is the domain name
- location is a context, and / is the path
- proxy_pass is a directive, and http://localhost:3000 is the target URL

So we are telling Nginx to listen on port 80 for requests coming to myapp.com and proxy them to http://localhost:3000.
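Nginx chooses the server block for a request by comparing the request's Host header with server_name. This means you can also check the proxy from a script, without a browser or a hosts-file entry, by sending the Host header explicitly. Below is a minimal sketch that uses only Python's standard http.client. It assumes Nginx listens on 127.0.0.1:80, and the helper name fetch_via_nginx is hypothetical:

```python
import http.client
from typing import Tuple


def fetch_via_nginx(host: str, path: str = "/", nginx_host: str = "127.0.0.1",
                    nginx_port: int = 80) -> Tuple[int, str]:
    """Send GET `path` to Nginx with an explicit Host header (hypothetical helper).

    Nginx matches this header against `server_name`, so no /etc/hosts
    entry is needed for the check.
    """
    conn = http.client.HTTPConnection(nginx_host, nginx_port, timeout=5)
    try:
        # Supplying "Host" ourselves stops http.client from adding its own.
        conn.request("GET", path, headers={"Host": host})
        response = conn.getresponse()
        return response.status, response.read().decode("utf-8", errors="replace")
    finally:
        conn.close()
```

Once the mock backend from the following steps is running on port 3000, fetch_via_nginx("myapp.com") should return status 200 along with the backend's response body. If no backend is running, Nginx typically answers 502 Bad Gateway instead.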

Testing the configuration

To test the configuration, run the following command:

sudo nginx -t

We should see something like this:

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

Reloading the configuration

To reload the configuration, run the following command:

sudo nginx -s reload

Hosts File

Since no DNS record points myapp.com at this machine, we map the name to the loopback address by adding the following line to the hosts file:

sudo vim /etc/hosts

127.0.0.1 myapp.com

Creating a Mock HTTP Server and Testing the Configuration

Let's create a mock HTTP server using Python's built-in http.server module (on Ubuntu 20.04 the interpreter is called python3):

python3 -m http.server 3000

Now let's test the configuration by visiting http://myapp.com in the browser.

Voilà! We can see the static content (by default, a listing of the current directory) served by the mock HTTP server.
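With several backends, a directory listing makes it hard to tell which process answered. One alternative is a tiny backend that names its own port in every response. This is a sketch using only the standard library (ThreadingHTTPServer needs Python 3.7+). The file name backends.py and the names NamedHandler and start_backend are hypothetical:

```python
import http.server
import sys
import threading


class NamedHandler(http.server.BaseHTTPRequestHandler):
    """Answers every GET with the port of the backend that handled it."""

    def do_GET(self) -> None:
        port = self.server.server_address[1]
        body = f"Hello from backend on port {port}\n".encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def start_backend(port: int, host: str = "") -> http.server.ThreadingHTTPServer:
    """Bind to (host, port) and serve from a daemon thread; "" means all IPv4 interfaces."""
    server = http.server.ThreadingHTTPServer((host, port), NamedHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


# Usage: python3 backends.py 3000 3001  (without port arguments, nothing is started)
if __name__ == "__main__" and len(sys.argv) > 1:
    servers = [start_backend(int(arg)) for arg in sys.argv[1:]]
    print("Serving on ports:", ", ".join(sys.argv[1:]))
    try:
        threading.Event().wait()  # keep the main thread alive until Ctrl+C
    except KeyboardInterrupt:
        for server in servers:
            server.shutdown()
            server.server_close()
```

Running python3 backends.py 3000 can stand in for the mock server above. Running python3 backends.py 3000 3001 starts both backends needed for the load-balancing section in one process, and each response names the port that served it.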

As a Reverse Proxy and Load Balancer

Let's create another Nginx configuration file called reverse.conf:

sudo vim /etc/nginx/conf.d/reverse.conf

And add the following configuration:

upstream reverse {
    server localhost:3000;
    server localhost:3001;
}

server {
    listen 80;
    server_name reverse.com;

    location / {
        proxy_pass http://reverse;
    }
}

Explanation

- upstream is a context, and reverse is the name of the upstream
- server is a directive; localhost:3000 is the first server and localhost:3001 is the second
- location is a context, and / is the path
- proxy_pass is a directive, and http://reverse refers to the upstream by name

Testing the configuration

To test the configuration, run the following command:

sudo nginx -t

We should see something like this:

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

Reloading the configuration

To reload the configuration, run the following command:

sudo nginx -s reload

Hosts File

Since no DNS record points reverse.com at this machine, we map the name to the loopback address by adding the following line to the hosts file:

sudo vim /etc/hosts

127.0.0.1 reverse.com

Creating Mock HTTP Servers and Testing the Configuration

Let's start two mock HTTP servers from two different directories, each in its own terminal, so that each one serves different content:

cd /some/directory

python3 -m http.server 3000

cd /some/other/directory

python3 -m http.server 3001

Now let's test the configuration by visiting http://reverse.com in the browser.

We can see the static content served by the first mock HTTP server. If we refresh the page, we see the content served by the second one. This is because Nginx's default load-balancing method is round-robin: each new request goes to the next server in the upstream block.
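Nginx's round-robin also supports per-server weights (for example, server localhost:3000 weight=3;) and spreads weighted picks smoothly rather than in bursts. Below is a simplified sketch of that smooth weighted round-robin rule. It ignores health checks, max_fails, and backup servers, and the class name is hypothetical. With equal weights, it reduces to the plain alternation seen above:

```python
from typing import Dict


class SmoothWeightedRoundRobin:
    """Simplified smooth weighted round-robin over a fixed set of servers."""

    def __init__(self, weights: Dict[str, int]) -> None:
        self.weights = dict(weights)  # server -> configured weight
        self.current = {server: 0 for server in weights}
        self.total = sum(weights.values())

    def next(self) -> str:
        # Every server earns its weight on each pick...
        for server, weight in self.weights.items():
            self.current[server] += weight
        # ...the highest running score wins (ties go to the first listed)...
        chosen = max(self.current, key=lambda server: self.current[server])
        # ...and pays back the total, so heavy servers are interleaved, not bunched.
        self.current[chosen] -= self.total
        return chosen
```

With weights 5, 1, and 1 for servers a, b, and c, seven picks come out as a, a, b, a, c, a, a. The heavy server receives five of every seven requests, interleaved with the others rather than sent in a burst.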

Voila2! We created a reverse proxy and load balancer.

Advanced configurations

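We can configure the load-balancing method, the number of worker processes, and many other settings.

For example, we can change the load-balancing method by adding one of the following directives inside the upstream block:

- least_conn
- ip_hash
- hash
- random
- random two
- least_time
- least_time header
- least_time last_byte
- least_time last_byte inflight
- least_time last_byte inflight duration

Note that hash needs a key (for example, hash $request_uri;) and that the least_time variants are available only in the commercial NGINX Plus.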
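Two of these methods are easy to sketch. least_conn sends a request to the server with the fewest active connections. ip_hash pins a client to one server by hashing its address; for IPv4, Nginx uses the first three octets as the key. The following simplified Python sketch works under those assumptions. The function names are hypothetical, weights are ignored, and zlib.crc32 stands in for Nginx's internal hash function:

```python
import zlib
from typing import Dict, List


def pick_least_conn(active: Dict[str, int]) -> str:
    """Pick the server with the fewest active connections; ties go to the first listed."""
    return min(active, key=lambda server: active[server])


def pick_ip_hash(servers: List[str], client_ip: str) -> str:
    """Pin a client to a server by hashing the first three octets of its IPv4 address."""
    key = ".".join(client_ip.split(".")[:3])  # "192.168.1.10" -> "192.168.1"
    # crc32 is a stand-in; unlike Python's hash() for str, it is stable across runs.
    return servers[zlib.crc32(key.encode("ascii")) % len(servers)]
```

Because ip_hash keys on the client address, the same client keeps reaching the same backend as long as the server list does not change. This is useful when backends keep per-user session state in memory.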

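We can set the number of worker processes to the number of CPU cores (auto) or to a fixed value. This directive belongs in the main (top-level) context of /etc/nginx/nginx.conf, not in a file under conf.d/. Ubuntu's default nginx.conf includes those files inside the http block, where worker_processes is not allowed:

worker_processes auto;

# worker_processes 4;

We can also set the maximum number of simultaneous connections per worker in the events block of nginx.conf. Ubuntu's default nginx.conf already has one, so edit it rather than adding a second: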

events {
    worker_connections 1024;
}

There are many other settings, which you can find in the official documentation.
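The two settings multiply: each worker can hold up to worker_connections open connections. The official documentation notes that this count includes connections to proxied servers, not only to clients, so a proxied request typically uses two (client side and upstream side). Below is a back-of-the-envelope sketch. It ignores keepalive pools and the open-file limit (ulimit -n or worker_rlimit_nofile), either of which can lower the real number, and the function names are hypothetical:

```python
import os


def resolve_worker_processes(setting: str) -> int:
    """Approximate `worker_processes`: "auto" means one worker per CPU core."""
    if setting == "auto":
        return os.cpu_count() or 1  # cpu_count() may return None
    return int(setting)


def estimate_max_clients(worker_processes: int, worker_connections: int,
                         proxied: bool) -> int:
    """Rough upper bound on simultaneous clients."""
    total = worker_processes * worker_connections
    # A proxied request holds one client connection and one upstream connection.
    return total // 2 if proxied else total
```

With the 12 workers from the status output and worker_connections 1024, this gives 12 × 1024 = 12,288 connections, or roughly 6,144 simultaneously proxied clients.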

Footprint

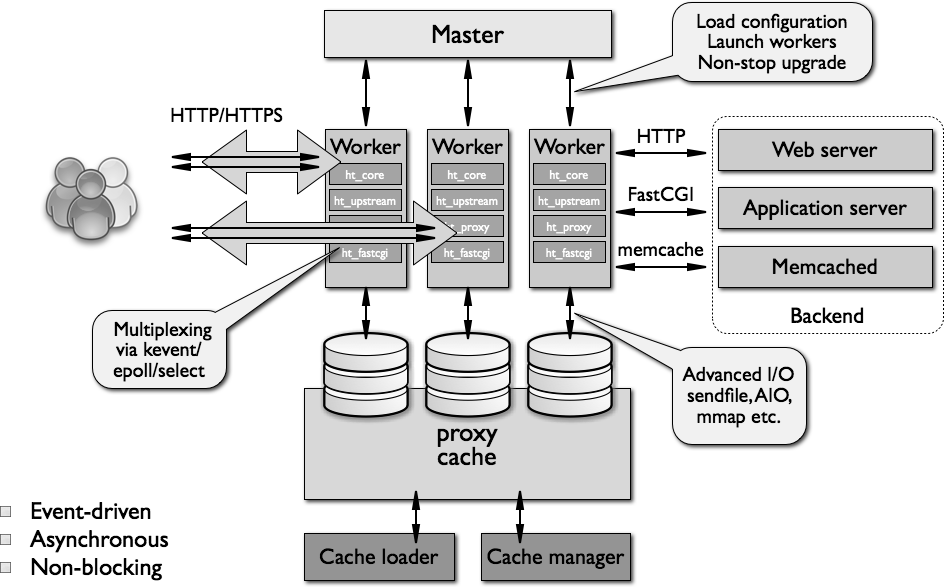

Nginx requires less memory to run than web servers like Apache Tomcat and Apache httpd. This is because each Nginx worker process is single-threaded and event-driven and uses non-blocking, asynchronous I/O, so it can handle many connections without creating a thread or process per connection.

From the nginx chapter of The Architecture of Open Source Applications (aosabook)³:

As previously mentioned, nginx doesn't spawn a process or thread for every connection. Instead, worker processes accept new requests from a shared "listen" socket and execute a highly efficient run-loop inside each worker to process thousands of connections per worker. There's no specialized arbitration or distribution of connections to the workers in nginx; this work is done by the OS kernel mechanisms. Upon startup, an initial set of listening sockets is created. workers then continuously accept, read from and write to the sockets while processing HTTP requests and responses.

The run-loop is the most complicated part of the nginx worker code. It includes comprehensive inner calls and relies heavily on the idea of asynchronous task handling. Asynchronous operations are implemented through modularity, event notifications, extensive use of callback functions and fine-tuned timers. Overall, the key principle is to be as non-blocking as possible. The only situation where nginx can still block is when there's not enough disk storage performance for a worker process.

Because nginx does not fork a process or thread per connection, memory usage is very conservative and extremely efficient in the vast majority of cases. nginx conserves CPU cycles as well because there's no ongoing create-destroy pattern for processes or threads. What nginx does is check the state of the network and storage, initialize new connections, add them to the run-loop, and process asynchronously until completion, at which point the connection is deallocated and removed from the run-loop. Combined with the careful use of syscalls and an accurate implementation of supporting interfaces like pool and slab memory allocators, nginx typically achieves moderate-to-low CPU usage even under extreme workloads.
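The run-loop described above can be illustrated in miniature with Python's selectors module, which uses epoll on Linux, the same kernel mechanism behind Nginx's epoll event module. A single thread registers the listening socket and every accepted connection with one selector and touches only the sockets the kernel reports as ready, so no thread or process is created per connection. This is a toy, not Nginx's code: it upper-cases whatever a client sends and then closes the connection, and the function name serve is hypothetical:

```python
import selectors
import socket


def serve(listener: socket.socket, max_replies: int) -> int:
    """Run a one-thread event loop until `max_replies` responses have been sent."""
    sel = selectors.DefaultSelector()  # epoll on Linux, kqueue on BSD/macOS
    listener.setblocking(False)
    sel.register(listener, selectors.EVENT_READ, data="listener")
    replies = 0
    try:
        while replies < max_replies:
            # Wait until the kernel reports at least one ready socket.
            for key, _mask in sel.select(timeout=1.0):
                sock = key.fileobj
                if key.data == "listener":
                    # New connection: add it to the loop instead of spawning a worker.
                    conn, _addr = sock.accept()
                    conn.setblocking(False)
                    sel.register(conn, selectors.EVENT_READ, data="client")
                    continue
                data = sock.recv(1024)
                if data:
                    # Tiny reply, assumed to fit in the socket send buffer in one call.
                    sock.send(data.upper())
                    replies += 1
                # Request handled (or client hung up): remove it from the loop.
                sel.unregister(sock)
                sock.close()
    finally:
        # Close any client connections still registered, then the selector itself.
        for key in list(sel.get_map().values()):
            if key.data == "client":
                key.fileobj.close()
        sel.close()
    return replies
```

Every connection, however many, is handled by the same thread. Nginx's workers apply the same idea at a much larger scale, adding timers, buffered non-blocking writes, and HTTP parsing.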

Alternatives

Varnish is an excellent alternative to Nginx. Like Nginx, it's written in C, blazing fast, and memory-efficient. It is configured with its own language, VCL (Varnish Configuration Language), and is mostly used as an HTTP cache and reverse proxy.

Conclusion

There are many features I didn't cover, such as caching, gzip compression, SSL/TLS, and static file serving.

I hope you enjoyed this tutorial and learned something new.

³ aosabook: Andrew Alexeev, "nginx", in The Architecture of Open Source Applications, Volume II.